I suspect you have recently had trouble getting Google to index the URLs of your new project, or to pick up content changes you’ve made to your existing pages. Well, let me tell you that your luck may change: you can use Google’s Indexing API to notify the bot about everything new on your website.

Ever since the Request Indexing option in Search Console was disabled, I’ve seen many people struggling, simply waiting for the bot to notice their changes.

I did the same for a while, trying all kinds of techniques to force indexing, from linking the page from a key element of the site to building new links. But nothing seemed to work. I knew it was possible to index a URL using Google’s API, but I had never needed to do so.

For this reason, I looked for information about the API and found a fairly easy option that helped me achieve much better results. In this post I will walk you through signing up for the API and indexing your URLs with a simple Python script.

If you can’t wait, skip ahead to the section “How to use the Indexing API and make it work” to see the whole process.

The present and possible future of indexing

Although they are not the same thing, indexing goes hand in hand with crawling: Google has to discover and crawl a page before it can index it. Over time, Google has kept improving its search system to make crawling the entire web simpler. According to Google, its Search index contains hundreds of billions of webpages and is well over 100,000,000 gigabytes in size (so you can imagine the investment this implies).

The internet has become the new library, one we can all access from anywhere and without loan or catalogue limits. Google discovers all these webpages to give users easier access to information, but its 2019 Webspam Report revealed that more than 25 billion of the pages it discovers every day are spam.

One of Google’s top priorities is to keep this index as small as possible and, more importantly, to make sure it only contains quality results. Of course, as you may have guessed, this API could act as the perfect filter to avoid crawling pages that bring nothing to the user and, therefore, to ensure the indexed content is of the highest quality.

Although crawling still works today as we all know it, following every link and discovering pages as it goes through each of them, with an API-based system indexing spam content would not be as easy.

Simply by keeping out all this spam and saving crawling resources, Google would not have to spend as much money improving its systems. It could also avoid having the bot crawl every page just to discover URLs, along with the resources that crawling consumes.

There are surely many other factors that could pave the way for this new form of indexing, but that topic deserves a full post of its own. This is just an idea of how things could change over time; if you want more information, I recommend reading Kevin Indig’s detailed post on the topic.

Well… let’s begin!

Indexing problems nowadays

For some time now, the SEO community has seen a number of indexing problems, some of which even affected page canonicalization.

In 2020 alone, it is estimated that we suffered up to three indexing bugs. The first took place on April 23rd, and the last was announced via Google’s Twitter account on October 2nd.

The latter was probably the worst for the SEO community, judging by the complaints we heard from many professionals about this bug. Although the two were unrelated, on October 14th the community found that the Search Console indexing option had been disabled.

Although the last indexing problem was solved a week after this announcement, the Search Console indexing option has not been re-enabled yet.

Google Indexing API: What you should know

For those who don’t know, the Indexing API lets you notify Google about changes to your URLs, from the creation of new pages on your website to their removal.

As Google states in its documentation, the Indexing API lets you:

- Update a URL

- Remove a URL

- Get the status of a request

- Send batch indexing requests
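To make these functions a bit more concrete, here is a minimal Python sketch of how the update, removal and status requests differ. It assumes the v3 REST endpoints described in Google’s Indexing API documentation (`urlNotifications:publish` for updates and removals, `urlNotifications/metadata` for status checks). The helper names `build_publish_request` and `build_status_request` are hypothetical, and they only build the request without sending it. Batch requests use a separate multipart endpoint and are left out here.

```python
import json
from urllib.parse import urlencode

# Endpoints as described in Google's Indexing API v3 documentation.
PUBLISH_ENDPOINT = "https://indexing.googleapis.com/v3/urlNotifications:publish"
METADATA_ENDPOINT = "https://indexing.googleapis.com/v3/urlNotifications/metadata"


def build_publish_request(url, removed=False):
    """Return (method, endpoint, body) for an update or a removal notification."""
    notification_type = "URL_DELETED" if removed else "URL_UPDATED"
    body = json.dumps({"url": url, "type": notification_type})
    return "POST", PUBLISH_ENDPOINT, body


def build_status_request(url):
    """Return (method, endpoint, body) to ask for the latest notification sent for a URL."""
    # The URL goes in the query string, fully percent-encoded.
    return "GET", METADATA_ENDPOINT + "?" + urlencode({"url": url}), None
```

Each tuple could then be passed to the authorized `http` object from the Python script later in this post, for example `http.request(endpoint, method=method, body=body, headers={"Content-Type": "application/json"})`.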

It’s also worth adding that, according to Google, the API can only be used to crawl pages with “JobPosting” or “BroadcastEvent” (embedded in a VideoObject) structured data. In practice, however, ever since it was launched it has also worked to get other kinds of URLs indexed.

How to use the Indexing API and make it work

In this section we will go through the process of using Google’s Indexing API step by step. Finally, we will use a simple Python script in Google Colab to submit the URLs.

Here we go!

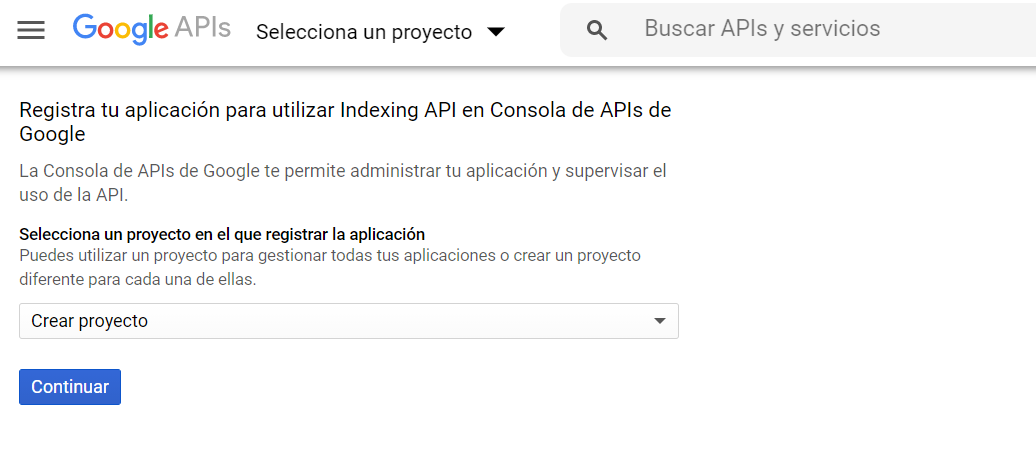

The first thing to do is create a project in the Google API Console, so you can enable the API and download the key the project needs to work (a JSON file with your service account’s credentials, which I’ll refer to as the API KEY).

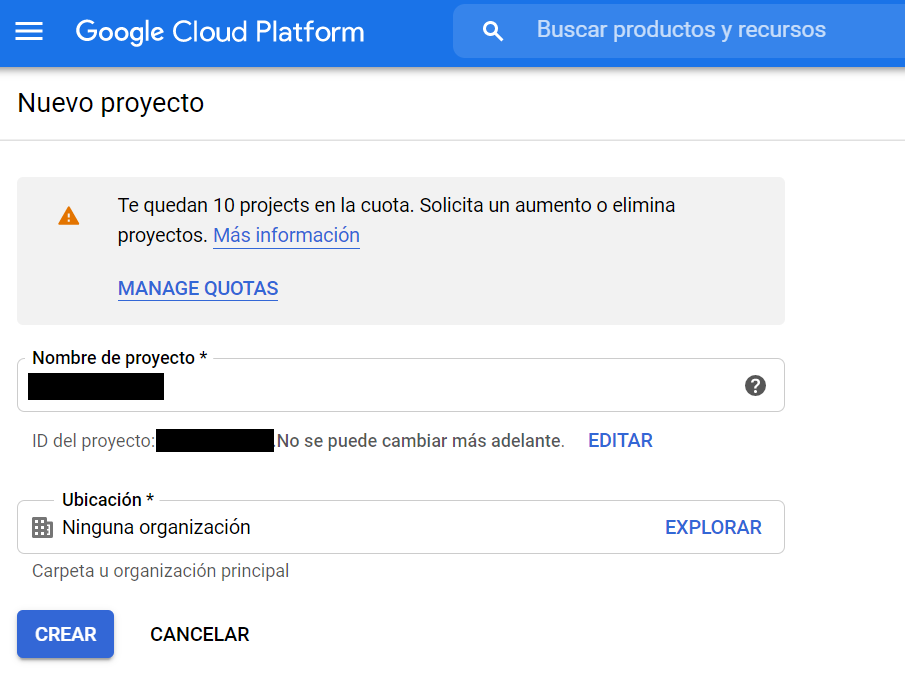

When you create the project, you will see a window like the following, where you can name it:

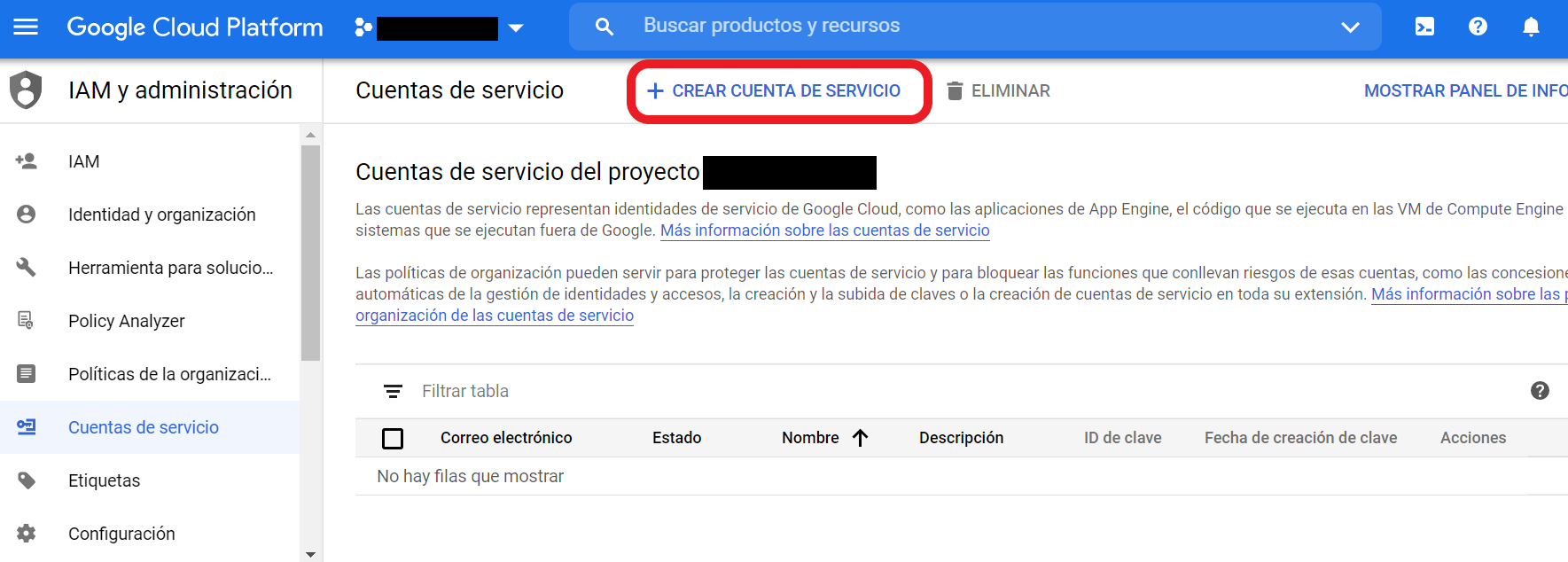

As you can see, each account has a limited project quota (12 projects remaining in this case), so you can create several depending on your needs. After creating one, a new window will open showing the service accounts associated with the new project. Click “Create service account” here to get your API KEY.

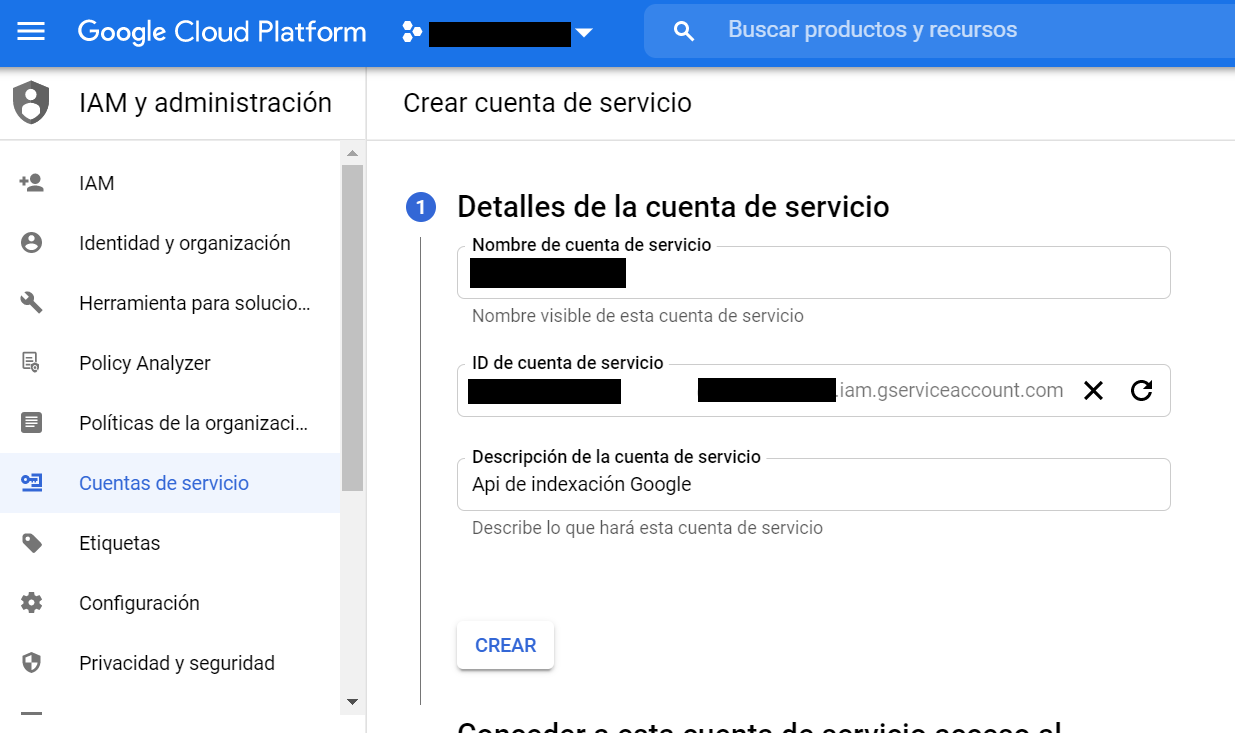

You will then see another window where you can name your new service account; an ID based on that name will be generated. Click “Create” to continue.

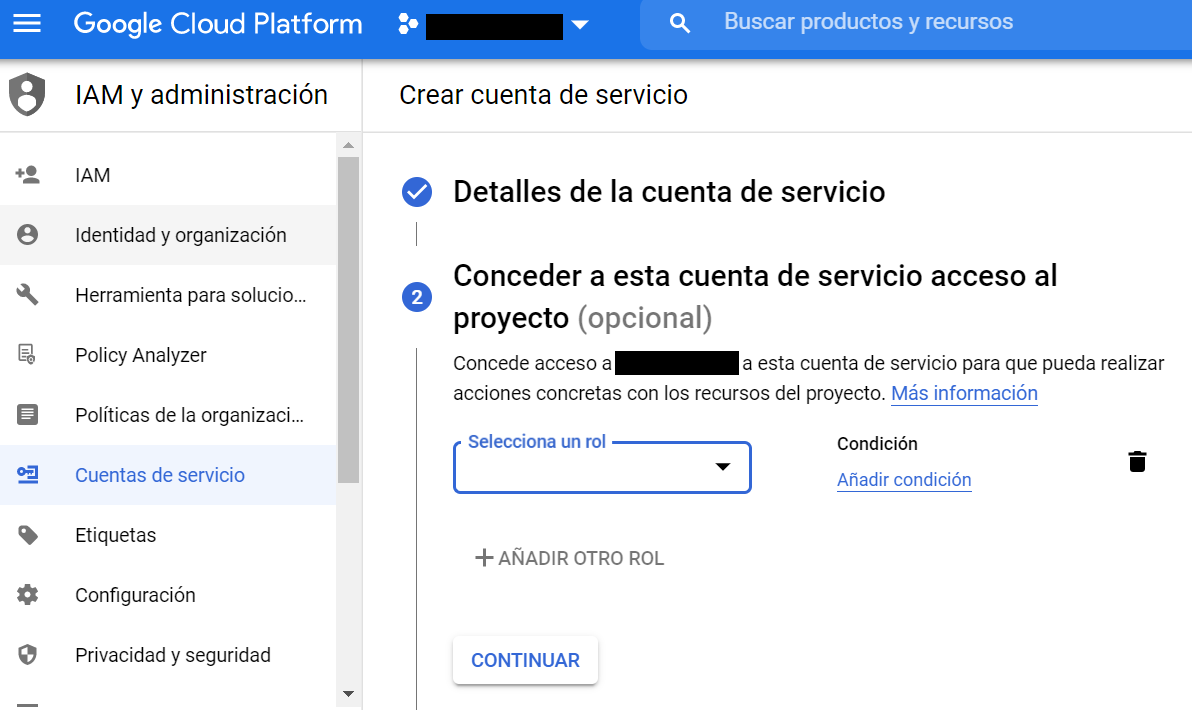

In the next step you need to grant this service account access to your project. To avoid problems, give it the “Owner” role, which you will find under the “Project” section.

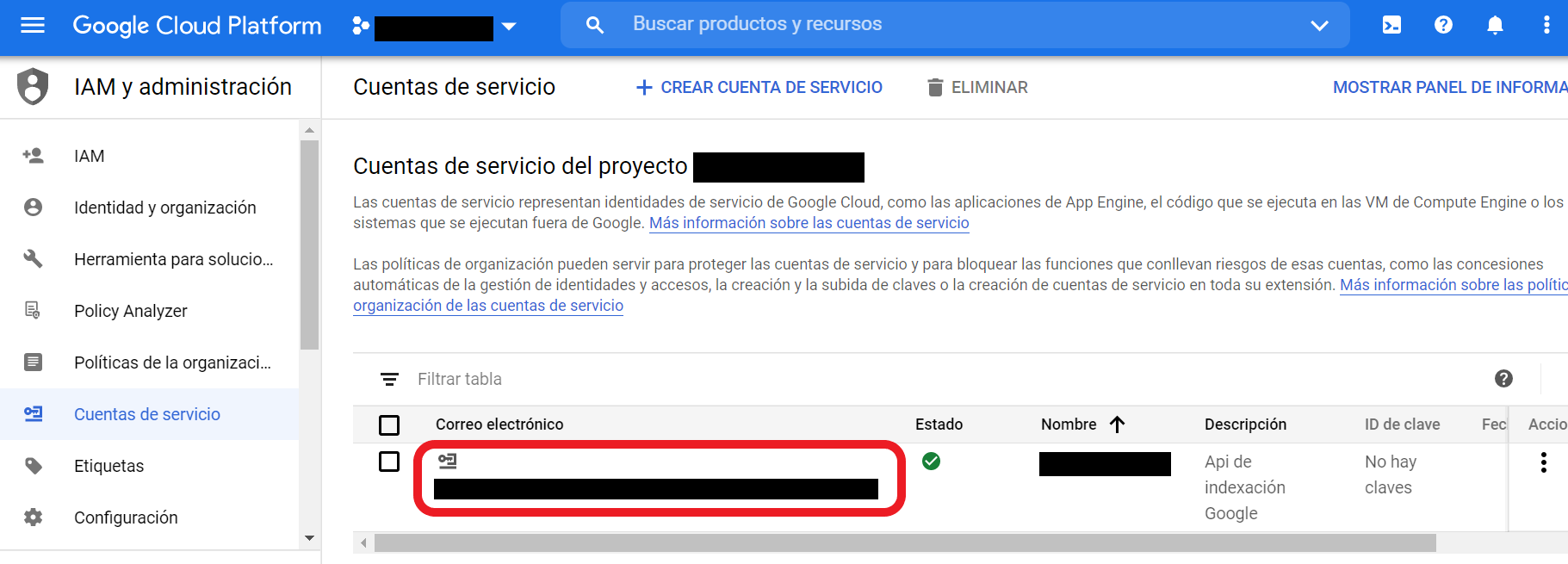

You don’t need to do anything in step 3; I recommend moving on without changing any parameters. Once you have completed these three steps, you will see a screen confirming that a new service account has been added to your project.

You will also see that an email address has been created and associated with this account. This email is really important, as you must add it as an owner of the Search Console property where the website you want to use the API with is verified. Bear in mind that if you want to use the API for more than one website, you will need to add it as an owner of each of them.

In Search Console, within the property where you want to use the API, go to the “Settings” section > “Users and permissions”. To the right of the owner’s entry you will see three vertical dots; clicking them opens a pop-up menu with the option “Manage property owners”.

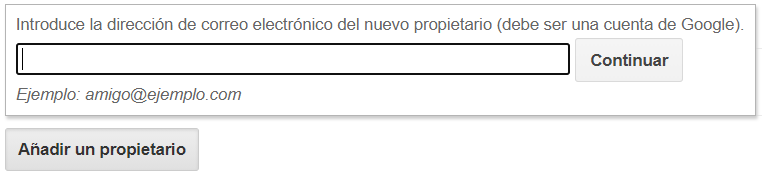

Clicking this option takes you to the old version of Search Console, where you can add a new owner.

In this field, enter the email address of the service account and press “Continue”. This makes the service account an owner of the property, so the API can submit any URL from that Search Console property for indexing.

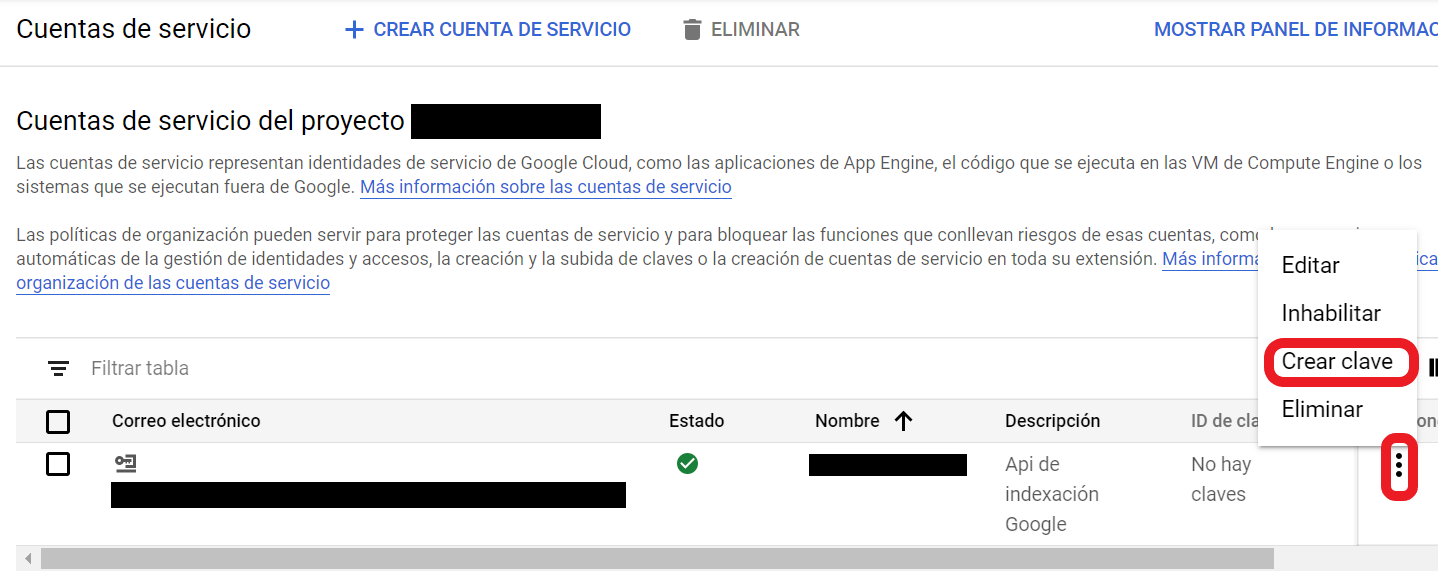

After this, go back to the window listing your service accounts and create the API KEY you will use to send the URLs.

A new pop-up window will appear where you create your API KEY; choose JSON as the key type. When you click “Create”, a file will download to your computer: you will upload it to Google Colab in order to use the API.
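The service account email you need for Search Console is also stored inside this JSON file, in its `client_email` field, so you can read it from there instead of copying it by hand. The sketch below assumes the standard service account key layout (`type`, `client_email` and `private_key` fields); `extract_client_email` and `read_client_email` are hypothetical helper names.

```python
import json

# Fields a service account key downloaded from the Google API Console is assumed to contain.
REQUIRED_FIELDS = ("type", "client_email", "private_key")


def extract_client_email(key):
    """Return the service account email from an already-parsed key dictionary."""
    missing = [field for field in REQUIRED_FIELDS if field not in key]
    if missing:
        raise ValueError("Key is missing fields: " + ", ".join(missing))
    if key["type"] != "service_account":
        raise ValueError("This JSON file is not a service account key")
    return key["client_email"]


def read_client_email(key_path):
    """Load the downloaded JSON key file and return the email to add in Search Console."""
    with open(key_path, encoding="utf-8") as fh:
        return extract_client_email(json.load(fh))
```

Calling `print(read_client_email("path/to/your-key.json"))` (path hypothetical) prints the address to add as an owner in Search Console.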

Finally, open the Google APIs Library, search for “Indexing API” and enable it.

Once the API is enabled, the JSON file is on your computer and the service account email has been added to Search Console, go to Google Colab and create a new notebook.

Google Indexing API with Python

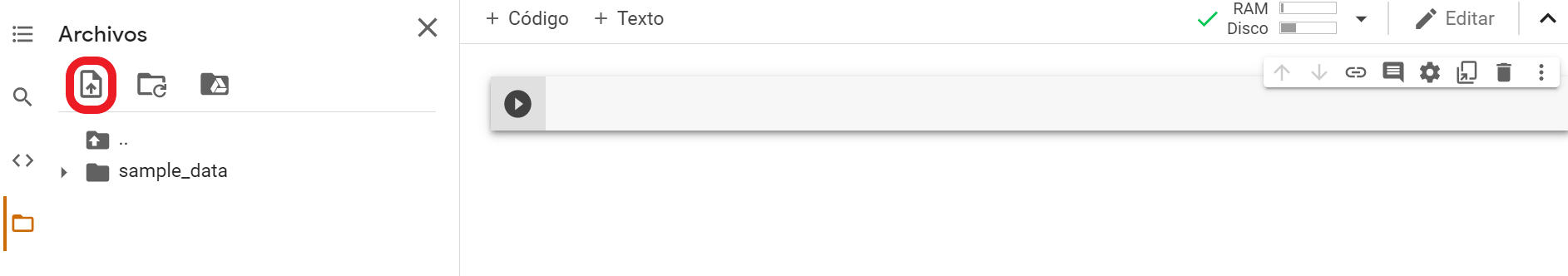

In this new Google Colab notebook, upload the JSON file containing your API KEY. On the left there is a menu with a section called “Files”, from which you can upload any file you want to use in your script.
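If you’d rather not copy the file path by hand, this minimal sketch finds the uploaded key programmatically. It assumes the file landed in Colab’s default working folder, `/content`, and that it is the only `.json` file there; `find_key_file` is a hypothetical helper.

```python
from pathlib import Path


def find_key_file(directory="/content"):
    """Return the path of the only .json file in `directory` (assumed Colab upload folder)."""
    candidates = sorted(Path(directory).glob("*.json"))
    if len(candidates) != 1:
        names = ", ".join(p.name for p in candidates) or "none"
        raise FileNotFoundError(f"Expected exactly one JSON file in {directory}, found: {names}")
    return str(candidates[0])
```

The returned string could be used as the value of `JSON_KEY_FILE` in the script that follows.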

Once the JSON file containing the API KEY is uploaded to your new notebook, you will just need to copy the following code and complete a couple more steps to make it work.

```python
import json

import httplib2
from oauth2client.service_account import ServiceAccountCredentials

# Scope and endpoint used to publish URL notifications with the Indexing API.
SCOPES = ["https://www.googleapis.com/auth/indexing"]
ENDPOINT = "https://indexing.googleapis.com/v3/urlNotifications:publish"

# The JSON key file is the private key that you created for your service account.
JSON_KEY_FILE = "(PATH OF THE JSON FILE UPLOADED TO YOUR NOTEBOOK)"

# Build credentials from the key file and an HTTP client that signs every request.
credentials = ServiceAccountCredentials.from_json_keyfile_name(JSON_KEY_FILE, scopes=SCOPES)
http = credentials.authorize(httplib2.Http())

# Notification body: the URL to submit and the notification type.
body = json.dumps({
    "url": "(URL YOU WANT TO SUBMIT FOR INDEXING)",
    "type": "URL_UPDATED",
})

response, content = http.request(
    ENDPOINT,
    method="POST",
    body=body,
    headers={"Content-Type": "application/json"},
)

print(response)
print(content)
```

To find the path of the file uploaded to your notebook, open the “Files” panel and locate your JSON. Clicking the three vertical dots to its right reveals the “Copy path” option. Paste this path into the previous code, along with the URL you want to index.

Once you have both, just run the cell. If you have followed all the steps correctly, you will see a response under the script; check that it includes a 200 status (shown as 'status': '200'). That tells you the request worked.
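Since the raw output can be hard to read, a small helper can turn it into a one-line summary. This is a sketch assuming the JSON shapes described in Google’s documentation: a successful publish call returns `urlNotificationMetadata` with a `latestUpdate` entry, while errors return an `error` object with a `message`. `summarize_publish_response` is a hypothetical name.

```python
import json


def summarize_publish_response(status, content):
    """Turn the status and body of a publish call into a short human-readable message."""
    if isinstance(content, bytes):
        content = content.decode("utf-8")
    payload = json.loads(content) if content else {}
    if int(status) == 200:
        # Success: report which notification type was recorded for which URL.
        latest = payload.get("urlNotificationMetadata", {}).get("latestUpdate", {})
        return f"OK: {latest.get('type', 'notification')} accepted for {latest.get('url', 'unknown URL')}"
    # Failure: surface the API's own error message.
    error = payload.get("error", {})
    return f"Error {status}: {error.get('message', 'no error message returned')}"
```

After the `http.request(...)` call you could run `print(summarize_publish_response(response.status, content))`. A 403 error typically means the service account has not been added as an owner of the Search Console property.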

There you go! Now, just by changing the URL you send, you can request indexing of any page. Keep in mind that the API has daily and per-minute quotas, which you can check for your project in the Google API Console; unless you send requests in batches, it will be hard to use them all up.
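If you have many URLs, it helps to plan them against your quota before sending anything. The sketch below deduplicates a list, keeps only as many URLs as the daily quota allows and splits them into batches. The default values (200 URLs per day, 100 calls per batch) are assumptions based on Google’s documentation at the time of writing; check your project’s Quotas page, since your limits may differ. `plan_batches` is a hypothetical helper.

```python
def plan_batches(urls, daily_quota=200, batch_size=100):
    """Deduplicate URLs, cap them at the daily quota and split today's share into batches.

    Returns (batches, leftover): the batches to send today and the URLs left for later.
    """
    unique = list(dict.fromkeys(urls))  # removes duplicates while keeping the original order
    today = unique[:daily_quota]
    leftover = unique[daily_quota:]
    batches = [today[i:i + batch_size] for i in range(0, len(today), batch_size)]
    return batches, leftover
```

Each batch could then be sent using Google’s batching support (for example `new_batch_http_request()` on a service built with the `google-api-python-client` library), keeping the leftover URLs for the next day.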

Of course, this is not the only way to do it. David Sottimano has also created a Node.js script to use the Indexing API. And in recent days we have seen other SEOs from the Spanish community share other Python-based methods on Twitter, as in the case of Natzir Turrado.

As you can see, there are several ways of doing this, but personally, this is the one I find easiest. I hope you liked this method and that you find it useful!

More interesting resources:

- https://developers.google.com/search/apis/indexing-api/v3/quickstart

- https://www.google.com/search/howsearchworks/mission/creators/

- https://webmasters.googleblog.com/2020/06/how-we-fought-search-spam-on-google.html

- https://www.google.com/search/howsearchworks/crawling-indexing/

- https://static.googleusercontent.com/media/research.google.com/en//pubs/archive/34570.pdf