In recent weeks, major media outlets such as the BBC, along with the SEO community itself, have covered a new Google update rolled out on October 24: BERT (Bidirectional Encoder Representations from Transformers).

The members of the SEO Department at Premium Leads have read Google’s announcement of the update, and below they explain in detail what BERT is and how it works.

Introducing BERT: Google’s Latest Update

Pandu Nayak, Vice President of Search at Google, explained how incorporating BERT improves the results returned for user searches. His article emphasizes the work involved in showing the most appropriate information for what a person is looking for on Google.

Keep in mind that people often combine or drop certain words when typing into the search engine, so their queries lose the natural phrasing of everyday language. Google has therefore chosen to implement a system that better understands what the user is really looking for.

Although language comprehension had already advanced considerably, BERT further improves the way the search engine understands the user. Understanding exactly what users are looking for and displaying the most appropriate information has been one of Google’s biggest goals for years.

What is BERT?

BERT is a neural network-based technique for pre-training language representation models. Its training is based on randomly masking certain words in a sentence or text and having the model predict which words were hidden. This follows the cloze procedure, in which certain words are omitted from a text and the reader has to work out what those words are.

The model was pre-trained on a corpus of more than 3.3 billion words drawn from Wikipedia and BookCorpus, a corpus widely used for this type of model that is no longer available for distribution.
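To make the cloze idea concrete, here is a minimal Python sketch of the masking step only, assuming a simple whitespace tokenizer (real BERT splits text into WordPiece subword tokens). It follows the recipe described in the original BERT paper: about 15% of tokens are selected, and of those, 80% are replaced with a `[MASK]` token, 10% with a random token and 10% are left unchanged. The function name `mask_tokens` and the tiny vocabulary built from the sentence itself are illustrative assumptions, not part of any Google API; the neural network that learns to fill in the blanks is not shown.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, mask_prob=0.15, seed=None):
    """Cloze-style masking in the spirit of BERT's pre-training.

    Each token is selected with probability mask_prob. A selected token is
    replaced by [MASK] 80% of the time, by a random vocabulary token 10% of
    the time, and kept unchanged 10% of the time. Returns the masked
    sequence and a dict {position: original word} the model must predict.
    """
    rng = random.Random(seed)
    # Toy vocabulary (assumption): only the words of this sentence.
    # Real BERT samples replacements from its full vocabulary.
    vocab = sorted(set(tokens))
    masked, targets = [], {}
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            targets[i] = tok  # the word the model has to recover
            r = rng.random()
            if r < 0.8:
                masked.append(MASK)
            elif r < 0.9:
                masked.append(rng.choice(vocab))
            else:
                masked.append(tok)
        else:
            masked.append(tok)
    return masked, targets

sentence = "the man went to the store to buy a gallon of milk".split()
masked, targets = mask_tokens(sentence, seed=1)
print(masked)
print(targets)
```

Keeping a few selected words unchanged or randomly swapped, rather than always showing `[MASK]`, is the paper's way of stopping the model from relying on the mask symbol being present.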

How does BERT work?

To explain how BERT’s language processing works, I think the easiest approach is to use Pandu Nayak’s own explanation in Google’s article. He describes BERT as “models that process words in relation to all the other words in a sentence, rather than one-by-one in order.” This type of understanding allows Google to take into account the full context of a word based on the words around it. We explain this in more detail below.

As you have probably deduced, this helps the search engine correctly understand the user’s search intent, reducing the ambiguity that occurs in certain searches.
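Processing each word “in relation to all the other words in a sentence” is what Transformer self-attention does. The following is a heavily simplified Python sketch of scaled dot-product attention, assuming tiny hand-picked 2-dimensional word vectors (hypothetical numbers, not learned values) and using the raw vectors directly as queries, keys and values. Real BERT learns separate projections for these, uses many attention heads and stacks many layers, so treat this only as an illustration of the principle.

```python
import math

def softmax(scores):
    # Subtract the max before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(vectors):
    """Scaled dot-product attention weights.

    Row i holds how strongly word i attends to every word j in the
    sentence (including itself); each row sums to 1.
    """
    d = len(vectors[0])
    return [
        softmax([sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in vectors])
        for query in vectors
    ]

def contextualize(vectors):
    """Replace each word vector with an attention-weighted mix of all word vectors."""
    weights = attention_weights(vectors)
    d = len(vectors[0])
    return [
        [sum(w * vec[k] for w, vec in zip(row, vectors)) for k in range(d)]
        for row in weights
    ]

# Hypothetical 2-D vectors for "I made a bank deposit" (illustrative only).
words = ["I", "made", "a", "bank", "deposit"]
vectors = [[0.1, 0.0], [0.3, 0.2], [0.0, 0.1], [0.9, 0.8], [1.0, 0.9]]

bank_row = attention_weights(vectors)[words.index("bank")]
for word, weight in zip(words, bank_row):
    print(f"bank -> {word}: {weight:.2f}")
```

Because every row mixes in all positions at once, the new vector for “bank” depends on words on both sides of it; with these made-up numbers, “bank” places its largest weight on “deposit”.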

Why does Google bet on BERT? The importance of context in the user’s search intent

When we talk about BERT, we are talking about NLP, or Natural Language Processing. This may sound complicated, but language comprehension models of this type have been used for years to improve communication between people and machines.

These models are trained on large amounts of data to predict relationships between words or phrases. Beyond predicting these relationships, they can also recognize entities or answer certain questions.

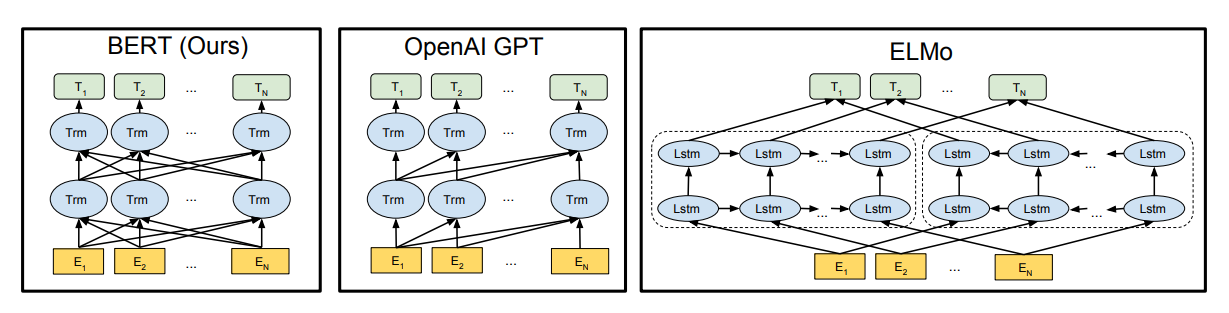

Until now, existing models such as ELMo or OpenAI GPT were not able to improve language comprehension the way BERT does. These models relied on unidirectional analysis (in ELMo’s case, a shallow combination of two separate one-directional passes), which limited their training and architecture. Other models like Word2vec also fail to capture such specific relationships, because they assign each word a single fixed representation instead of interpreting it in context. BERT, by contrast, is deeply contextual and bidirectional, as its name implies.

For instance:

“I made a bank deposit”

In this example, we need context to work out what the word “bank” means.

Previous models relied only on the words to the left or to the right of a given word: they took “I made” into consideration but not “deposit”, or vice versa. BERT takes both sides into account (both “I made” and “deposit”) in a fully bidirectional procedure. This improves the comprehension of words such as “bank” that can have several meanings. The graphic below illustrates this interpretation in a more sophisticated way.
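The difference between one-directional and bidirectional context for “bank” can be sketched in a few lines of Python. The hypothetical helper `context` only lists which neighboring words each approach can “see” for a given position; it does not model how a real network weighs those words.

```python
def context(tokens, index, mode):
    """Return the words available as context for tokens[index].

    Hypothetical helper: "left-to-right" sees only preceding words,
    "right-to-left" only following words, "bidirectional" both sides.
    """
    left = tokens[:index]
    right = tokens[index + 1:]
    if mode == "left-to-right":
        return left
    if mode == "right-to-left":
        return right
    if mode == "bidirectional":
        return left + right
    raise ValueError(f"unknown mode: {mode}")

tokens = "I made a bank deposit".split()
i = tokens.index("bank")
print(context(tokens, i, "left-to-right"))   # ['I', 'made', 'a']
print(context(tokens, i, "right-to-left"))   # ['deposit']
print(context(tokens, i, "bidirectional"))   # ['I', 'made', 'a', 'deposit']
```

Only the bidirectional view contains both “made” and “deposit”, which is what disambiguates “bank” in this sentence.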

Nayak also set out the advantages of using BERT in Search: according to Google, it helps the search engine better understand about 1 in 10 English-language searches in the United States. So far it only applies in the United States, although many SEO professionals think it may already be working in Europe as well.

The update will have the greatest impact on long-tail keywords and on searches that include prepositions such as “for”, “between” or “to”. Thanks to this technique, the context surrounding each word in the whole sentence can be better understood, so users can search using more natural language. It also affects featured snippets, where these word connections are taken into account to improve the results.

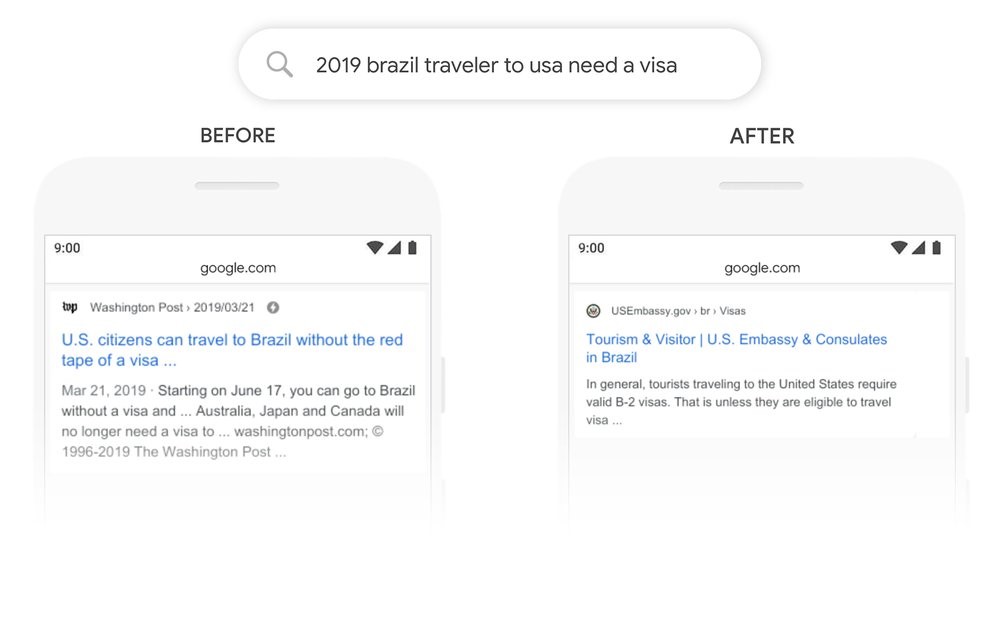

Let’s look at an example in detail:

This example from Pandu Nayak is the clearest way to understand how the system operates: the prepositions in the query and their position in the text are taken into account to interpret the search correctly.

“2019 brazil traveler to usa need a visa”

As Nayak explains in the article, the words and prepositions that link this phrase are essential to understanding the correct meaning of the search. The word “to” is crucial for understanding that the user is a Brazilian traveling to the United States, and not the other way around. Until now, the algorithm was not able to understand this word connection and returned results about U.S. citizens traveling to Brazil. With BERT, this should no longer happen, and the results for this search are now very different from what they were before the update.
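A toy Python comparison shows why ignoring word order loses the meaning of “to”. It assumes a naive keyword-matching view of a query (a set of words, often called a bag of words) and contrasts it with a representation that keeps adjacent word pairs (bigrams). This is only an illustration of the problem, not how Google or BERT actually represents queries.

```python
def bag_of_words(query):
    # Order-free view: only which words appear, not where.
    return frozenset(query.lower().split())

def bigrams(query):
    # Order-aware view: each word paired with the word that follows it.
    words = query.lower().split()
    return set(zip(words, words[1:]))

a = "2019 brazil traveler to usa need a visa"
b = "2019 usa traveler to brazil need a visa"

print(bag_of_words(a) == bag_of_words(b))  # True: same words, direction lost
print(bigrams(a) == bigrams(b))            # False: word order is preserved
print(("to", "usa") in bigrams(a))         # True: "to" is attached to "usa"
```

Even this crude order-aware view can tell the two trips apart; BERT goes much further by building a representation of every word from the whole sentence.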

That said, if you find a search where BERT does not seem to interpret the query correctly, keep in mind that it is applied to only about 1 in 10 searches.

BERT’s Influence on SEO: How Does This Update Affect the SERPs?

Google updates its algorithm constantly, and changes in the SERPs are more frequent than ever. Many SEOs miss the days when there were only one or two algorithm updates per year.

Today the situation is different. In 2019 alone we have been through at least 8 core updates (some of them not confirmed by Google). In 2018 there were at least 14 confirmed updates, according to some of the tools that track Google’s update history.

Let’s go back to the main topic: How does BERT affect SEO?

BERT started working within Google’s algorithm on October 24. Since then, many debates about its influence have appeared in different communities, with widely differing opinions. Google’s own article about the update makes the matter clear.

BERT affects how the queries users type into the search engine are interpreted, not the content of a website. This doesn’t mean there’s nothing for SEOs to do. On the contrary, Google increasingly rewards naturalness, so SEOs must make their websites, and especially their content, more natural. Pages that repeat keywords over and over will be left behind, especially if they use unnatural keyword combinations without prepositions.

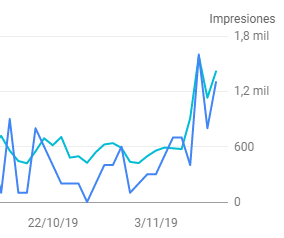

Is there a relation between BERT and Google’s update on November 8?

On November 8, some SEO communities were talking about another update, although Google has not confirmed it.

Yes, definitely some chatter about volatility starting around 11/7. I started reviewing sites & noticed some surges starting right on 11/7… Here are some screenshots of search visibility jumping. I see this in GA as well BTW. Could also be seasonal as the holidays kick in… pic.twitter.com/p7cDpypRbK

— Glenn Gabe (@glenngabe) November 9, 2019

At Premium Leads, we have also detected changes in some websites that had not performed as expected after Google’s previous updates. Here are some examples:

At Premium Leads, we recommend improving the semantics of your content by covering all the variations a user might type when performing a search. Above all, don’t forget about naturalness: always think about how a user would phrase the search, not how an SEO professional would.

Have you seen any changes in the SERPs during November? How do you think BERT will affect your projects and the way users search? Share your thoughts with us in the comments section!